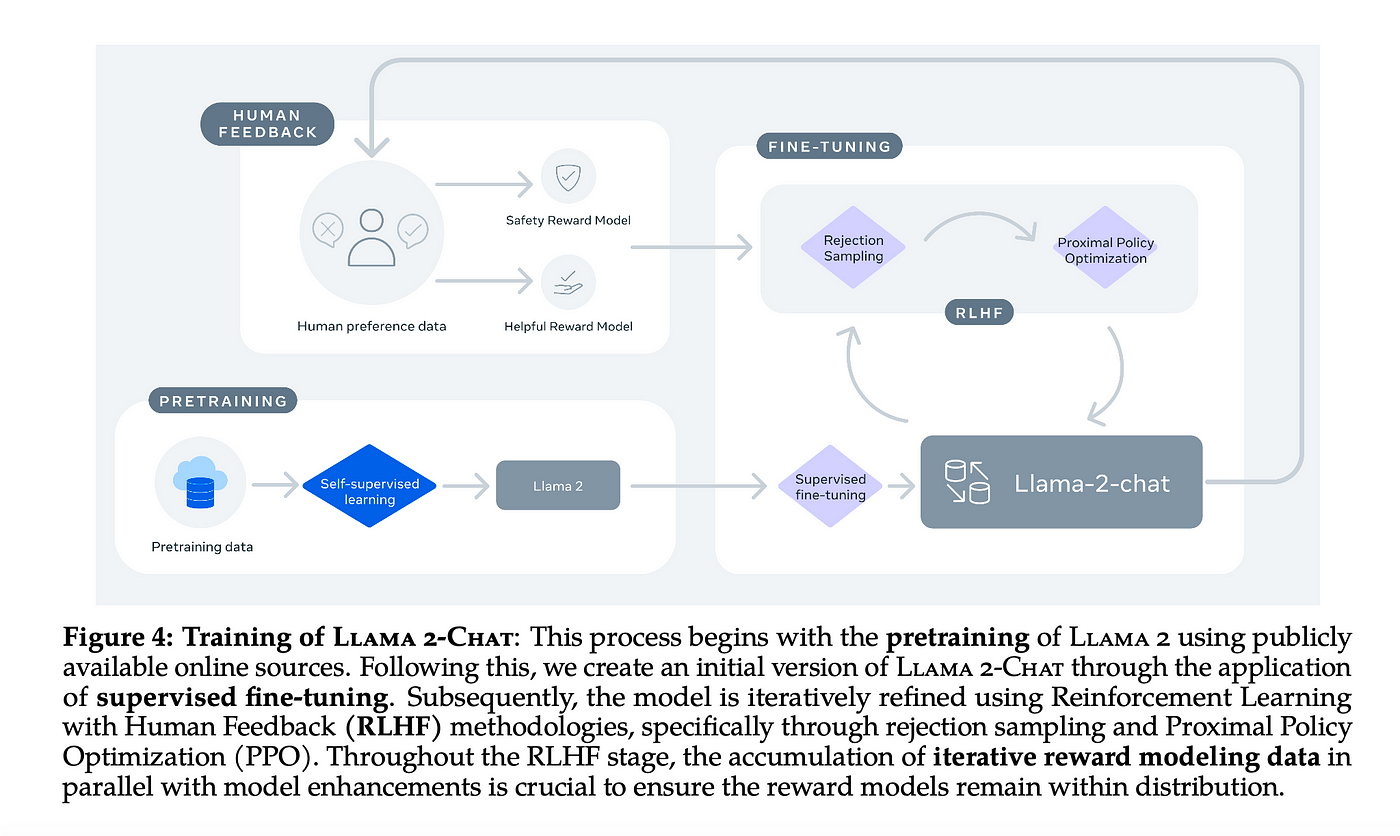

In this work, we develop and release Llama 2, a collection of pretrained and fine-tuned large language models (LLMs) ranging in scale from 7 billion to 70 billion parameters. The Llama 2 paper describes the architecture in enough detail to help data scientists recreate and fine-tune the models, unlike OpenAI papers, where you have to deduce it. The earlier LLaMA paper reads: "We introduce LLaMA, a collection of foundation language models ranging from 7B to 65B parameters. We train our models on trillions of tokens, and show that it is ..." Open source, free for research and commercial use: "We're unlocking the power of these large language models. Our latest version of Llama, Llama 2, is now accessible to individuals ..."

From the license: "If, on the Llama 2 version release date, the monthly active users of the products or services made available by or for Licensee, or Licensee's affiliates, is ..." Unlock the full potential of Llama 2 with the developer documentation; the Getting started guide provides instructions and resources to start building with Llama 2. Llama 2 is also available under a permissive commercial license, whereas Llama 1 was limited to non-commercial use. Llama 2 is capable of processing longer prompts than Llama 1 and is ... Llama 2 is the next generation of Meta's open source large language model, available for free for research and commercial use. Meta and Microsoft announced an expanded artificial intelligence partnership with the release of their new large language model.

This repo contains GPTQ model files for Meta's Llama 2 7B Chat. Multiple GPTQ parameter permutations are provided; see Provided Files below for details of the options. Llama 2 is a collection of pretrained and fine-tuned generative text models ranging in scale from 7 billion to 70 billion parameters. This is the repository for the 7B pretrained model, converted for the ... The 7 billion parameter version of Llama 2 weighs 13.5 GB. After 4-bit quantization with GPTQ, its size drops to 3.6 GB, i.e., 26.6% of its original size. Llama 2 encompasses a range of generative text models, both pretrained and fine-tuned, with sizes from 7 billion to 70 billion parameters. Below you can find and download Llama 2 ... My fine-tuned Llama 2 7B model weighed 13.5 GB on disk, but after 4-bit quantization its size was dramatically reduced to just 3.9 GB, about a third of the original size.
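The size figures quoted above (read here as 13.5 GB before and 3.6 GB after 4-bit GPTQ quantization) can be sanity-checked with simple arithmetic. The sketch below is illustrative only: the helper `model_size_gb` is a hypothetical name, and the parameter count of roughly 6.74 billion for the 7B model is an assumption that does not come from the text above. It counts raw weight storage only, so it ignores the scales, zero points, and unquantized layers that a real GPTQ file also carries.

```python
def model_size_gb(n_params: float, bits_per_weight: float) -> float:
    """Approximate storage for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return n_params * bits_per_weight / 8 / 1e9


# Assumption: the "7B" model has roughly 6.74e9 parameters (not stated in the text).
N_PARAMS_7B = 6.74e9

fp16_gb = model_size_gb(N_PARAMS_7B, 16)  # 16-bit (half precision) weights
int4_gb = model_size_gb(N_PARAMS_7B, 4)   # 4-bit weights, no quantization metadata

# Ratio of the two sizes quoted in the text.
quoted_ratio = 3.6 / 13.5

print(f"fp16 estimate:  {fp16_gb:.2f} GB")
print(f"4-bit estimate: {int4_gb:.2f} GB (weights only)")
print(f"quoted ratio:   {quoted_ratio:.1%}")
```

Under these assumptions the 16-bit estimate lands close to the quoted 13.5 GB, while the raw 4-bit estimate comes out somewhat below the quoted 3.6 GB. That gap is consistent with the extra per-group metadata and unquantized tensors stored in a quantized file, though the exact overhead depends on the quantization settings.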

Medium, balanced quality; prefer using Q4_K_M. Initial GGUF model commit (models made with llama.cpp commit bd33e5a), 75c72f2. Uses Q6_K for half of the attention.wv and feed_forward.w2 tensors, else Q4_K. q4_k_s: uses Q4_K for all tensors. q5_0: higher accuracy, higher resource usage, and slower inference. Small, very high quality loss; prefer using Q3_K_M.
